Your Growing Webflow CMS is Great. So Why Aren't Your New Pages Getting Indexed?

You’ve done everything right. Your Webflow site is humming along, your blog is expanding, and your resource library is becoming the envy of your industry. You're adding dozens, maybe hundreds, of new CMS items, and your site is growing into a content powerhouse.

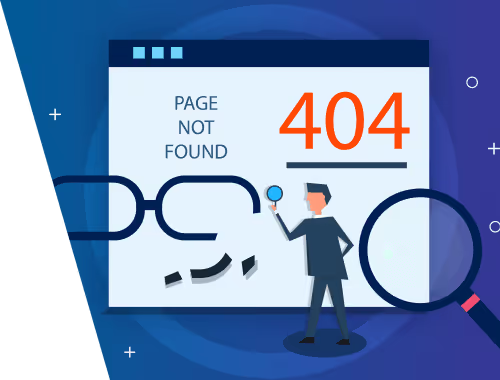

There’s just one problem. That brilliant new article you published last week? Google hasn't indexed it. The one from the week before? Nowhere to be found.

It’s a frustrating and surprisingly common scenario. As your Webflow CMS collection grows past a few hundred items and into the thousands, you can unknowingly cross an invisible line, the point at which you start giving search engines like Google too much to do. This is where you run into the hidden challenge of managing a large-scale website: the crawl budget.

This guide will break down what crawl budget is, why it's critical for large Webflow sites, and how to manage it effectively. We'll turn complex SEO theory into actionable steps you can take right inside Webflow, ensuring your valuable content gets the attention it deserves.

Crawling vs. Indexing: The Two-Step Dance of Search Engines

Before we dive into crawl budget, let's clarify two terms that are often used interchangeably but mean very different things: crawling and indexing.

- Crawling is the discovery process. Googlebot (Google's web crawler) follows links to find new or updated pages on the internet. Think of it as a librarian walking through a massive library, simply taking note of every single book on every shelf.

- Indexing is the analysis and storage process. After a page is crawled, Google tries to understand what it's about, analyzes its content and quality, and stores it in its massive database (the index). Only indexed pages can appear in search results. Think of this as the librarian reading each book, categorizing it, and deciding where it should go in the library's official catalog so people can find it.

The key takeaway? A page can be crawled but not indexed. Google might see your page exists but decide it's not valuable, unique, or important enough to add to its index. For large sites, the challenge is ensuring Google crawls your most important pages first and sees them as worthy of indexing.

What is Crawl Budget (And Why Should Your Large Webflow Site Care)?

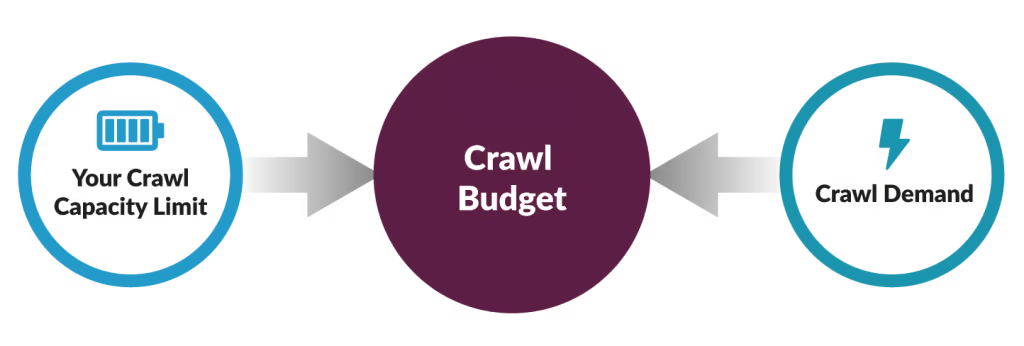

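Google doesn't have unlimited resources. It can only spend so much time and energy crawling any single website, and this finite allowance is called the crawl budget. Google describes it as the set of URLs Googlebot can and wants to crawl. It is shaped by how much crawling your server can handle (the crawl capacity limit) and by how much Google wants to crawl your content (crawl demand).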

Imagine giving a personal shopper a $100 budget to buy items from a gigantic department store.

- If the store is small and well-organized, they can easily find and buy the best items within their budget.

- If the store is massive, with thousands of low-value items, disorganized aisles, and confusing signs, the shopper might waste their entire budget on unimportant things before they even find the high-value designer section.

Your website is the department store, and Googlebot is the personal shopper. Your crawl budget is the $100.

For small Webflow sites with a few dozen pages, crawl budget is rarely an issue. Googlebot can easily visit every page without running out of resources. But once your CMS collections for blogs, products, or listings reach thousands of items, the game changes. Google might spend its budget crawling low-priority pages like tags, categories with only one post, or internal search results, leaving too little for your brand-new, high-value content.

Webflow's incredibly clean code and automatic sitemaps give you a great head start, but they don't exempt you from managing your crawl budget as you scale.

Your Toolkit for Managing Crawl Budget in Webflow

The good news is that Webflow gives you all the tools you need to become an expert guide for Googlebot, pointing it directly to your best content. Here’s how to use them.

1. The robots.txt File: Your Site’s Bouncer

The robots.txt file is a simple text file that lives at the root of your domain (for example, yourdomain.com/robots.txt) and gives instructions to web crawlers. It tells them which pages or sections of your site they should not crawl, and reputable crawlers like Googlebot follow these rules. This is your first line of defense in preserving your crawl budget.

By blocking crawlers from low-value areas, you save your crawl budget for the pages that matter. In Webflow, you can edit this file directly in your Site Settings under the "SEO" tab.

Common pages to block in Webflow:

- Internal search results: Users generate these pages, creating near-infinite URLs with duplicate content. They offer no value to search engines.

- Utility pages: Pages like login screens, user profiles, or cart/checkout pages that shouldn't be in search results.

Here’s a sample robots.txt snippet you could use in Webflow to block the internal search path:

    User-agent: *
    Disallow: /search

This simple command tells all crawlers not to waste time in your search results pages, preserving budget for your actual content.
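You can sanity-check rules like this before publishing by running them through Python's standard-library `urllib.robotparser`. The sketch below is an illustration and not a Webflow feature. The `/checkout` rule and all of the test paths are assumptions. Python's parser only does simple prefix matching, so it approximates Googlebot's behavior rather than reproducing it.

```python
from urllib.robotparser import RobotFileParser

# The rule from this section, plus an assumed /checkout utility path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /checkout
"""

# Hypothetical paths to test against the rules.
PATHS = [
    "/search?query=shoes",
    "/search-engine-tips",
    "/checkout",
    "/blog/crawl-budget-guide",
]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The "User-agent: *" group applies to any crawler name, including Googlebot.
results = {path: parser.can_fetch("Googlebot", path) for path in PATHS}

for path, allowed in results.items():
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

Note the second path. Because rules match by prefix, `Disallow: /search` also blocks a top-level page such as the hypothetical `/search-engine-tips`. Google's own parser supports `*` wildcards and `$` end anchors for tighter rules (for example, `Disallow: /search$` together with `Disallow: /search?`). `urllib.robotparser` does not understand those, so test them in Search Console's robots.txt report instead.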

2. noindex Tags: The Surgical Approach

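While robots.txt prevents crawling, a noindex meta tag prevents indexing. This is a more surgical tool. You allow Google to crawl the page (and follow its links), but you explicitly tell it, "Don't put this specific page in your search results." Google has to crawl a page to see its noindex tag, so don't also block that page in robots.txt. If you do, the tag is never read.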

This is perfect for pages that need to exist for users but don't add SEO value, such as:

- Tag or category pages with thin or duplicate content.

- "Thank you" pages after a form submission.

- Author archives on a single-author blog.
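Google must crawl a page to read its noindex tag. A quick audit is therefore to look for pages that are both noindexed and disallowed in robots.txt. This minimal sketch assumes you already have a list of paths and know which ones carry the tag; the `PAGES` data is hypothetical. It reuses Python's `urllib.robotparser`, which only approximates Google's matching rules.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /thank-you
"""

# Hypothetical audit data: path -> does the page carry <meta name="robots" content="noindex">?
PAGES = {
    "/thank-you": True,
    "/tags/one-off-tag": True,
    "/blog/crawl-budget-guide": False,
}

def hidden_noindex(robots_txt, pages, agent="Googlebot"):
    """Return noindexed paths that robots.txt blocks, so Google never sees their tag."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        path
        for path, has_noindex in pages.items()
        if has_noindex and not parser.can_fetch(agent, path)
    ]

print(hidden_noindex(ROBOTS_TXT, PAGES))
```

Here `/thank-you` is flagged. Removing its robots.txt rule lets Google crawl the page and honor the noindex tag.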

In Webflow, you can add a noindex tag to any page in the Page Settings. For CMS Collection Pages, you can even use conditional logic to noindex items that don't meet certain criteria (e.g., a real estate listing that is marked as "Sold").

This level of control is crucial for large collections. Suppose you have 5,000 blog posts and 2,000 of them are assigned to tags that have only one or two posts each. You could then be asking Google to index a thousand or more low-value tag pages. Using noindex on these pages cleans up your site's footprint in Google's index.
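The tag-page cleanup described above can be sketched as follows, assuming you can export each post's tags (for example, from a CSV of your collection). Several pieces are illustrative assumptions rather than Webflow APIs:

- the `POST_TAGS` data;
- the `min_posts` threshold of 3, chosen to match "one or two posts";
- the idea of feeding the result back into the site.

```python
from collections import Counter

# Hypothetical export: post slug -> tag slugs assigned to that post.
POST_TAGS = {
    "post-a": ["webflow", "seo"],
    "post-b": ["webflow", "crawl-budget"],
    "post-c": ["webflow", "seo"],
    "post-d": ["one-off-launch"],
}

def thin_tags(post_tags, min_posts=3):
    """Return tag slugs used by fewer than min_posts posts, sorted alphabetically."""
    counts = Counter(tag for tags in post_tags.values() for tag in tags)
    return sorted(tag for tag, n in counts.items() if n < min_posts)

def robots_meta(tag, thin):
    """Build the robots meta tag a tag page would output (illustrative only)."""
    content = "noindex, follow" if tag in thin else "index, follow"
    return f'<meta name="robots" content="{content}">'

thin = thin_tags(POST_TAGS)
print(thin)
print(robots_meta("seo", thin))
print(robots_meta("webflow", thin))
```

How you wire this into Webflow depends on your setup. One possible approach is a hypothetical plain-text field on the Tags collection that the template's custom head code reads. Treat it as something to verify in your own project rather than a documented recipe.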

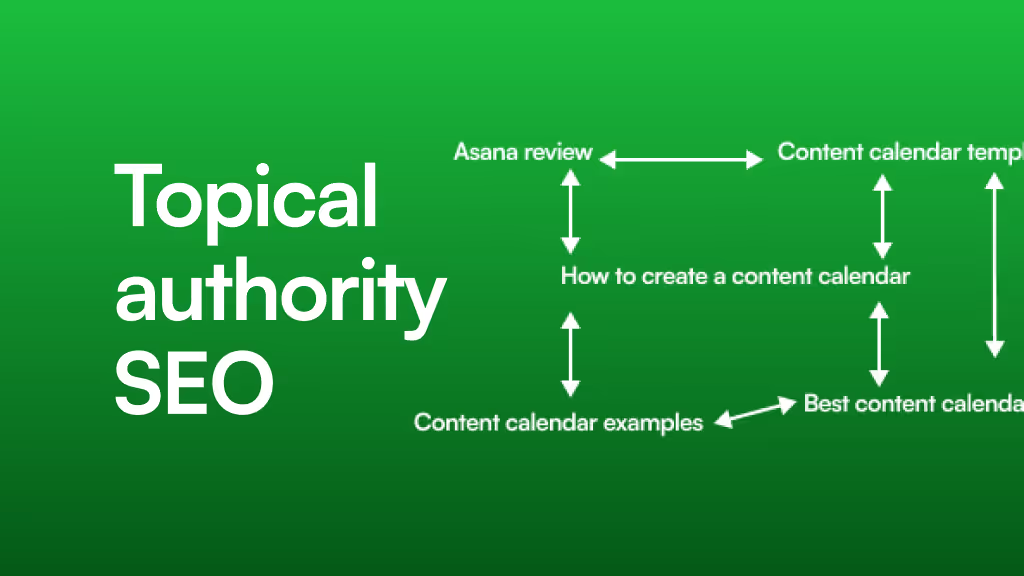

3. Internal Linking: Creating a Path to Your Best Content

How you link between your pages is one of the strongest signals you can send to Google about which pages are most important. Pages that receive many internal links from other important pages on your site are seen as more valuable.

For a large CMS collection, a flat structure where everything is linked only from a single paginated archive is inefficient. Older items end up buried many clicks deep, where crawlers are less likely to reach them. You need to create a logical hierarchy.

- Pillar Pages: Create comprehensive guides on your main topics.

- Topic Clusters: Link your individual blog posts or articles back to the main pillar page and to each other.

This structure does two things:

- It helps users discover more of your content.

- It creates a clear path for crawlers, funneling link equity and crawl priority to your most important pages.
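One way to see the difference is to measure click depth, meaning the fewest clicks from the homepage to each page, with a breadth-first search over the internal link graph. This is a simplified model. Google also discovers URLs through sitemaps, and every URL and structure below is hypothetical. It compares one "next page" archive with a pillar-and-cluster layout.

```python
from collections import deque

def click_depths(links, start="/"):
    """Breadth-first search: fewest clicks from the homepage to each reachable URL."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

def flat_archive(num_posts, per_page=10):
    """One archive, paginated with "next page" links only (hypothetical URLs)."""
    pages = (num_posts + per_page - 1) // per_page
    links = {"/": ["/blog"]}
    for p in range(1, pages + 1):
        url = "/blog" if p == 1 else f"/blog?page={p}"
        posts = [f"/post-{i}" for i in range((p - 1) * per_page, min(p * per_page, num_posts))]
        next_page = [f"/blog?page={p + 1}"] if p < pages else []
        links[url] = posts + next_page
    return links

def pillar_clusters(num_posts, num_pillars=10):
    """Homepage links to pillars; each pillar links to its posts, and posts link back."""
    links = {"/": [f"/pillar-{t}" for t in range(num_pillars)]}
    for i in range(num_posts):
        pillar = f"/pillar-{i % num_pillars}"
        links.setdefault(pillar, []).append(f"/post-{i}")
        links[f"/post-{i}"] = [pillar]
    return links

print(max(click_depths(flat_archive(100)).values()))
print(max(click_depths(pillar_clusters(100)).values()))
```

With 100 posts and 10 per page, the deepest post sits 11 clicks from the homepage in the flat archive. With 10 pillar pages, every post is 2 clicks away.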

A well-planned internal linking strategy is essential for any site, but for large-scale projects, it is the foundation of a successful SEO strategy. If you're building a new site or considering migrating existing websites to Webflow, planning this structure from the start is critical.

Advanced Challenges: Common Traps with Massive Webflow Sites

As your site grows, you’ll encounter more complex scenarios. Here are two common traps and how to navigate them.

The Faceted Navigation Trap

If you run an e-commerce store or a large marketplace with thousands of listings, you likely use filters (faceted navigation) to help users sort and find things—for example, filtering products by size, color, and price.

Each combination of these filters can create a new URL (e.g., /products?color=blue&size=large). Without proper management, this can generate millions of URL variations and create a massive crawl budget trap, with Googlebot burning through your budget on near-identical pages.
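The arithmetic behind that explosion is easy to sketch. The model below assumes each filter is either unset or set to a single value, and the facet counts are hypothetical. It can also count the same filters in a different parameter order as separate URLs, since they are distinct URL strings to a crawler.

```python
from itertools import combinations
from math import factorial, prod

def count_filter_urls(facets, count_orderings=False):
    """Count listing URLs produced by optional single-select filters.

    facets maps a filter name to its number of values. With count_orderings=True,
    color=blue&size=large and size=large&color=blue count as two URLs.
    """
    total = 0
    names = list(facets)
    for k in range(len(names) + 1):
        for subset in combinations(names, k):
            combos = prod(facets[name] for name in subset)  # empty subset -> 1, the unfiltered page
            total += combos * (factorial(k) if count_orderings else 1)
    return total

# Hypothetical catalog: 8 colors, 5 sizes, 4 price bands.
FACETS = {"color": 8, "size": 5, "price": 4}
print(count_filter_urls(FACETS))
print(count_filter_urls(FACETS, count_orderings=True))
```

That is 270 URLs for a single listing page, or 1,162 if parameter order varies. Across an assumed 50 category pages, that comes to 13,500 to 58,100 crawlable URLs from just three filters, and multi-select filters push the count far higher.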

The solution is to use canonical tags to tell Google which URL is the "master" version, and to use robots.txt strategically to block crawlers from filter combinations that don't offer unique value. Apply the two to different URLs, though, because Google can only read a canonical tag on a page it is allowed to crawl.
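The canonical half of that advice boils down to a URL normalization rule: strip the filter parameters so every variant points at one URL. The sketch below assumes hypothetical parameter names (`color`, `size`, `price`) and says nothing about how your Webflow setup outputs the tag. It keeps `page` because each paginated page should generally canonicalize to itself rather than to page 1.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet parameter names; everything else (e.g., page) is kept.
FILTER_PARAMS = {"color", "size", "price"}

def canonical_url(url):
    """Drop filter parameters and sort what remains so every variant maps to one URL."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in FILTER_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(url):
    """Build the <link rel="canonical"> element for a filtered URL."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'

print(canonical_tag("https://example.com/products?size=large&color=blue"))
print(canonical_tag("https://example.com/products?color=blue&page=2"))
```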

Pagination vs. "Load More" (The Finsweet Question)

How do you display thousands of CMS items? Native Webflow pagination is generally SEO-friendly. It creates distinct pages (/blog?page=2, /blog?page=3) that Google can crawl sequentially.

However, many sites use third-party solutions like Finsweet's CMS Load attributes to create infinite scroll or "load more" buttons. While this can be a great user experience, it can be tricky for SEO. If not implemented correctly, search engines may not be able to "click" the load more button and will never discover items beyond the first page.

It's crucial to ensure your "load more" solution still has fallback pagination links in the code that crawlers can follow. This gives you the best of both worlds: a dynamic user experience and a crawlable site structure. For complex projects, this is where expert Webflow development becomes invaluable to ensure both design and technical SEO are perfectly aligned.
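A quick way to check for fallback links is to look at the HTML as served, before any JavaScript runs. Check whether it contains plain `<a href>` pagination links; a `<button>` alone gives crawlers nothing to follow. In this sketch, both HTML snippets are hypothetical. The `page=` marker is also an assumption, so adjust it to match your site's actual pagination URLs.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect href values from <a> tags, the kind of links crawlers follow."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def pagination_links(html, marker="page="):
    """Return <a href> values that look like pagination URLs."""
    collector = LinkCollector()
    collector.feed(html)
    return [href for href in collector.hrefs if marker in href]

# Hypothetical server-rendered HTML for two "load more" setups.
BUTTON_ONLY = '<div class="posts">...</div><button class="load-more">Load more</button>'
WITH_FALLBACK = '<div class="posts">...</div><a class="load-more" href="/blog?page=2">Load more</a>'

print(pagination_links(BUTTON_ONLY))
print(pagination_links(WITH_FALLBACK))
```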

Your Large-Scale Webflow SEO Checklist

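Feeling overwhelmed? Use this scannable checklist, drawn from the steps above, to audit your site and ensure you're set up for success as you scale.

- Block internal search results and utility pages in robots.txt.

- Add noindex to thin tag, category, and author pages, "thank you" pages, and CMS items that no longer deserve to rank.

- Never block a noindexed page in robots.txt, or Google won't see the tag.

- Organize content into pillar pages and topic clusters with strong internal links.

- Use canonical tags and targeted robots.txt rules to contain faceted navigation URLs.

- Make sure "load more" or infinite scroll setups keep crawlable pagination links.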

Frequently Asked Questions (FAQ)

Is Webflow good for SEO with a large number of CMS items?

Absolutely. Webflow provides a fantastic foundation with clean code, SSL, and responsive design out of the box. However, like any powerful tool, it requires a strategy. A large site's success depends less on the platform and more on how you manage crawl budget, indexing, and site structure—all of which are fully controllable within Webflow.

What's the fastest way to control what Google indexes on my Webflow site?

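The fastest way to de-index a single page is to add a noindex tag in that page's settings. Then use Google Search Console's URL Inspection tool to request indexing, which prompts Google to recrawl the page and see the tag. For entire sections, robots.txt is the broadest way to stop pages from being crawled. However, it does not remove pages that are already indexed, and blocked URLs can still appear in results if other pages link to them. To clear an indexed section, keep it crawlable with noindex until it drops out, and use Search Console's Removals tool if you need the URLs hidden quickly. Add a robots.txt block only afterward.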

How does the 10,000 CMS item limit affect SEO?

The Webflow CMS limit (currently 10,000 items per collection on Business plans) is a content constraint, but it has indirect SEO implications. Hitting this limit might force you to split content across multiple collections or sites, which can complicate your site architecture. More importantly, managing a collection of even 5,000 items requires a proactive SEO strategy. The real challenge isn't the limit itself, but effectively managing the crawl budget for the thousands of pages you create before you hit the limit.

Does native Webflow pagination hurt my crawl budget?

No, when used correctly, it helps. Standard pagination creates a clear, logical path for search engine crawlers to follow to discover all of your CMS items. It's a predictable and efficient structure for them to process. The key is to keep paginated pages crawlable and free of thin or duplicate content. Let each page stand on its own rather than canonicalizing every page to page 1.

Conquer Your Content, Don't Just Create It

Building a massive library of content is an incredible achievement. But creating content is only half the battle. Ensuring it gets discovered, crawled, and indexed by search engines is what turns that content into a powerful engine for growth.

By understanding the principles of crawl budget and using Webflow’s built-in SEO tools strategically, you can take control of how search engines see your site. You can guide them to your best work, protect your resources, and build a scalable foundation that supports your growth for years to come. For organizations that need a well-structured site from day one, services that promise website delivery within seven days can be a great option. Long-term success, however, still requires diligent management and ongoing support to keep your technical SEO in check as you scale.